Algorithms in the judiciary

- Sandhya


Show Notes

- Support us by Donating.
- Chitranshul Sinha, The Great Repression India, Viking 2019
- Ishita Pande, “Phulmoni’s body: the autopsy, the inquest and the humanitarian narrative on child rape in India.” South Asian History and Culture 4.1 (2013): 9-30.
- Queen-Empress vs Hurree Mohun Mythee (1891) ILR 18 Cal 49 https://indiankanoon.org/doc/1410526/

Hi, I’m Sandhya and welcome to the DAKSH podcast. Courts remind us of paperwork, and more paperwork. Have you thought about technology in the courts? Imagine if courtrooms in India used technology, or even artificial intelligence. And no, I don’t mean having robots as judges.

In today’s episode, we are going to discuss the use of technology tools in the judiciary. So, in what way can technology help our justice system? Firstly, we have to understand that it presents both opportunities and challenges. Good news first: what are those opportunities? There is more to courts than arguments and decisions. Right from the time a case is filed, a long list of administrative tasks follows. This includes checking the case file for errors, preparing a day-wise schedule to list the cases in various courtrooms, and much more. Each of these processes can be automated. And this presents us with an excellent opportunity to help our courts function better.

Why are we talking about these administrative tasks? What makes them so important? And why should we discuss the technology used to implement these tasks? One of the most important principles governing the Indian justice system is open justice. This principle means that each and every step in the process of resolving cases, and in the administration of our justice system, should be open, transparent and accessible to citizens. It translates to making all courts open in the true sense of the word. This is why even the technology we use in these tasks should be open, transparent and easy to understand.

Is there a need for us to be obsessed with what technology we use in these tasks? Does technology impact certain aspects of open justice? Yes, and yes. International examples provide us with great insights into how some technology applications can impact open justice. For instance, in the US a technology tool called Correctional Offender Management Profiling for Alternative Sanctions, commonly known as COMPAS, was used briefly to decide on matters of bail. It was later revealed that this tool was not fit to be used, as it was reinforcing racial bias. How did this happen? The answer is simple. We build tools using existing information. And if that information is filled with patterns of bias, those patterns will easily be replicated when we build these tools. Such unintended consequences can impact open justice and can eventually lead to a lack of trust in the justice system.

What other challenges will our justice system face when we start using tools in admin tasks? For example, if an algorithm starts to plan and schedule administrative tasks, such as what cases are to be heard and when, it is possible that the tool’s efficiency is only as good as the training data one feeds it while building the tool. Training data consists of core tools and other parameters we want the algorithm to consider while we build it. So there is a good chance that this data can lead to the algorithm repeating existing problems within the system or, worse, escalating them further.

Does all this mean we should not venture into using any of these tools? Absolutely not. Like every new intervention, whether technological or not, these tools come with their own challenges. Technology is not inherently good or bad, but its usage should be monitored and regulated. Why? Because if any of these tools cause bias, it may lead to disastrous consequences. We have to be cautious because the judiciary is built on principles including open justice, fairness, due process and reasoned decisions. Nothing is perfect. So allowing the use of technology in some judicial tasks should come with the acceptance that some of those tasks will involve trial and error.

What are other countries doing? Some countries favor a generous approach to technology tools in a bid to increase efficiency, while others prefer to use such tools cautiously. Technological change can create avenues for improvement in the dispensation of justice. On the other hand, any incorporation of technology should be done in a manner that allows us to monitor its impact. While efficiency is a laudable ideal, we will have to ensure that trust in the system is not eroded. This can only be accomplished by constant vigilance: we have to study and monitor the implementation of technology tools in the judiciary. This vigilance is important to maintain the balance between efficiency and other fundamental principles of the justice system, including fairness and open justice.

Thank you for listening to this short episode. If you’d like to know more about this topic, visit our website www.dakshindia.org and read our paper, Algorithmic Accountability in the Judiciary. If you enjoyed the episode, do consider supporting us with a donation. The link is in the show notes below. Creating this podcast takes effort, and your support will help us sustain a space for these quality conversations. To find out more about us and our work, visit our website dakshindia.org, that’s D-A-K-S-H India dot org. Don’t forget to tap follow or subscribe to us wherever you listen to your podcasts so that you don’t miss an episode. We would love to hear from you. So do share your feedback, either by dropping us a review or rating the podcast where podcast apps allow you to, or by talking about it on social media. We are using the hashtag #DAKSHpodcast. It really helps get the word out there. Most of all, if you found some useful information that might help a friend or family member, share the episode with them. A special thanks to our production team at Made in India: our production head Nikitina K; editing, mixing and mastering by Lakshman Parashuram; and project supervision by Sean Fanthorpe.

RECENT UPDATE

Judicial discipline or lack thereof in NCLT and NCLAT

Webinar on Fast-tracking M&A Approvals and the NCLT

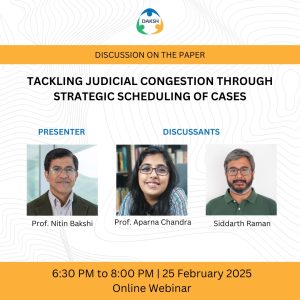

Webinar on Tackling Judicial Congestion Through Strategic Scheduling of Cases

-

Rule of Law ProjectRule of Law Project

-

Access to Justice SurveyAccess to Justice Survey

-

BlogBlog

-

Contact UsContact Us

-

Statistics and ReportsStatistics and Reports

© 2021 DAKSH India. All rights reserved
